Building a rich reservoir of Gurmukhi

Project Overview

The language of a region is an intrinsic expression of its culture. As a well-known quotation puts it: “Language is not just words. It’s a culture, tradition, a unification of a community, a whole history that creates what a community is.” All of this is embodied in a language.

Guru Angad Dev Ji developed and standardized the Gurmukhi script for recording sacred literature. Gurmukhi means “from the mouth of the Guru.” Owing to increasing Western influence, Gurmukhi is losing its identity. Protecting Punjabi culture, and its language in particular, is therefore imperative.

Project Goals

- Creating an NLP toolkit for Punjabi

- Building a Punjabi Chatbot

- Building a Contextual Search Engine on Sri Guru Granth Sahib Ji

- Developing a Digital Punjabi Shabdkosh (dictionary)

- Converting Punjabi speech to text

Punjabi Speech to Text

Among Indic languages, the ones most studied in speech recognition include Hindi and Bengali, while Punjabi ranks low on this list. Moreover, speech corpora for those languages are readily available in the public domain, which is not the case for Punjabi. To address this gap, Sabudh introduced a Speech Data Collection app that lets volunteers help build a rich Punjabi speech resource by recording short utterances of Punjabi literature.

The aim of this platform is to create a publicly available speech-to-text corpus that fuels research and development of Automatic Speech Recognition (ASR) models for the Punjabi/Gurmukhi language. The web application can be accessed at panjabi.ai.

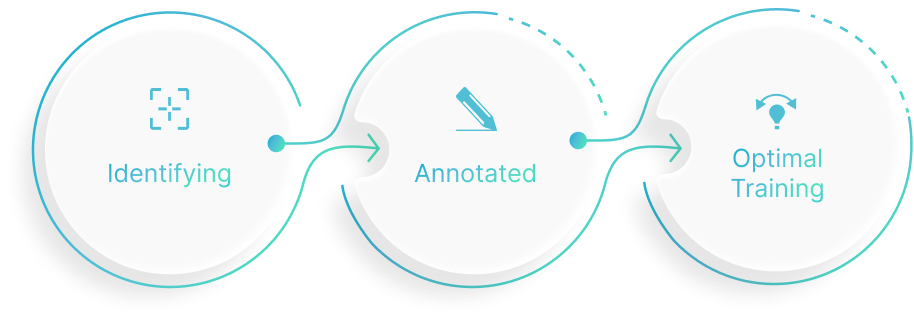

Alongside data acquisition, state-of-the-art methods are being incorporated into the application to build an optimal speech-to-text pipeline specifically for Punjabi.
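Comparing candidate speech-to-text pipelines requires a shared metric. The text does not name one; word error rate (WER) is assumed here because it is the standard ASR metric. A minimal sketch that computes WER as the word-level Levenshtein distance between a reference transcript and a model's output, divided by the number of reference words:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words.

    Words are whitespace-separated, which suits Gurmukhi text since words
    are separated by spaces.
    """
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")

    # prev[j] holds the edit distance between the previous row's reference
    # prefix and the first j hypothesis words (row 0: j insertions).
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)  # i deletions against an empty hypothesis
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion
                curr[j - 1] + 1,     # insertion
                prev[j - 1] + cost,  # substitution or exact match
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


if __name__ == "__main__":
    # One extra word in the hypothesis: 1 insertion over 3 reference words.
    print(word_error_rate("ਸਤਿ ਸ੍ਰੀ ਅਕਾਲ", "ਸਤਿ ਸ੍ਰੀ ਅਕਾਲ ਜੀ"))
```

Lower is better, and WER can exceed 1.0 when a model inserts many spurious words. In practice, transcripts would usually be Unicode-normalized before comparison so that visually identical Gurmukhi words compare equal.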

Natural Language Processing Toolkit

Natural Language Processing (NLP) is an umbrella term for the automated processing of human language. India is undoubtedly rich in languages but relatively poor in digitized resources for them, which makes building an NLP toolkit for a language such as Punjabi especially challenging. The toolkit aims to include language models trained on Punjabi text corpora, such as a named entity recognizer and a relationship classifier.

Training such models requires a large annotated dataset tailored to each module. Doccano, one of the most versatile annotation tools for NLP datasets, has been set up on the server for custom annotation.
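Annotation tools such as Doccano typically store entity annotations as character-offset spans, whereas many named entity recognizers are trained on token-level BIO tags. A minimal conversion sketch, assuming a JSONL export where each line has a `text` key and a `label` key holding `[start, end, tag]` spans (field names differ between Doccano versions, so check your own export). The `PER`/`LOC` tags and the `read_doccano_jsonl` helper are hypothetical:

```python
import json


def spans_to_bio(text: str, spans: list) -> list:
    """Convert [start, end, tag] character spans into (token, BIO-tag) pairs.

    Tokens are whitespace-separated. A token receives an entity tag if it
    overlaps the span; spans are assumed not to overlap each other.
    """
    tokens = []  # (token, start_offset, end_offset)
    pos = 0
    for tok in text.split():
        start = text.index(tok, pos)
        tokens.append((tok, start, start + len(tok)))
        pos = start + len(tok)

    tags = ["O"] * len(tokens)
    for span_start, span_end, label in sorted(spans):
        inside = False  # first overlapping token gets B-, the rest get I-
        for k, (_, tok_start, tok_end) in enumerate(tokens):
            if tok_start < span_end and tok_end > span_start:
                tags[k] = ("I-" if inside else "B-") + label
                inside = True
    return [(tok, tag) for (tok, _, _), tag in zip(tokens, tags)]


def read_doccano_jsonl(lines):
    """Yield BIO-tagged sentences from JSONL lines (hypothetical helper)."""
    for line in lines:
        if line.strip():
            record = json.loads(line)
            yield spans_to_bio(record["text"], record.get("label", []))


if __name__ == "__main__":
    text = "ਗੁਰੂ ਅੰਗਦ ਦੇਵ ਜੀ ਖਡੂਰ ਸਾਹਿਬ ਵਿਖੇ ਰਹੇ"
    person, place = "ਗੁਰੂ ਅੰਗਦ ਦੇਵ ਜੀ", "ਖਡੂਰ ਸਾਹਿਬ"
    p0, l0 = text.index(person), text.index(place)
    line = json.dumps(
        {"text": text,
         "label": [[p0, p0 + len(person), "PER"], [l0, l0 + len(place), "LOC"]]},
        ensure_ascii=False,
    )
    for sentence in read_doccano_jsonl([line]):
        print(sentence)
```

Offsets are computed with `text.index` rather than typed by hand because Gurmukhi vowel signs are separate code points, so the character count of a word is not obvious from how it looks.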

Data collection: The data currently in use is sourced from Punjabi news articles, a Punjabi grammar book, and a couple of FIR (First Information Report) documents, which were available only as images and were converted to text using Optical Character Recognition (OCR).
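OCR output from scanned documents usually contains stray symbols and inconsistent spacing. The cleanup step is not described in the text; the sketch below is one possible post-OCR filter, assuming the goal is Gurmukhi-only text. It applies Unicode NFC normalization, keeps only the Gurmukhi block (U+0A00 to U+0A7F), the danda and double danda (U+0964, U+0965), digits, and basic punctuation, then collapses whitespace. Dropping Latin letters is an assumption and would discard any English content in the reports:

```python
import re
import unicodedata

# Anything NOT in this set is replaced with a space: the Gurmukhi block,
# danda/double danda, ASCII digits, whitespace, and basic punctuation.
_DISALLOWED = re.compile(r"[^\u0A00-\u0A7F\u0964\u09650-9\s.,?!()\-]")
_RUNS_OF_SPACES = re.compile(r"[ \t]+")


def clean_ocr_text(raw: str) -> str:
    """Normalize OCR output and keep only Gurmukhi-relevant characters."""
    text = unicodedata.normalize("NFC", raw)
    text = _DISALLOWED.sub(" ", text)
    # Collapse spaces within each line and drop lines left empty.
    lines = [_RUNS_OF_SPACES.sub(" ", line).strip() for line in text.splitlines()]
    return "\n".join(line for line in lines if line)


if __name__ == "__main__":
    print(clean_ocr_text("ਪੰਜਾਬ  abc ।\n\n@@"))
```

Normalization matters because the same Gurmukhi word can be encoded with different code-point sequences, which would otherwise split counts and embeddings for what is really one word.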


Contextual Search Engine on Gurbani

A context-based search system for Punjabi text uses deep learning to search a collection of Punjabi documents and retrieve those that are contextually similar to the search query. Its results are more relevant to users than those of systems that rely solely on word-to-word matching.

A custom lemmatizer is needed for Gurbani because its rich vocabulary draws on words from various languages and dialects. The goal is to develop an algorithm that uses multi-layer (deep) artificial neural networks to convert text documents into word embeddings, which in turn can be used to calculate the similarity between documents.
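Once words have embeddings, document similarity can be computed directly. The sketch below assumes one simple baseline, which is not necessarily the project's method: each document is represented by the average of its word vectors and ranked by cosine similarity to the query. The three-dimensional vectors are toy, hand-picked values; real ones would come from a trained embedding model, and tokens would first pass through the lemmatizer so inflected forms map to the same vector:

```python
import math


def doc_embedding(tokens, word_vectors, dim):
    """Average the vectors of known tokens; zero vector if none are known."""
    known = [word_vectors[t] for t in tokens if t in word_vectors]
    if not known:
        return [0.0] * dim
    return [sum(values) / len(known) for values in zip(*known)]


def cosine(u, v):
    """Cosine similarity; 0.0 if either vector has zero length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rank(query_tokens, docs, word_vectors, dim):
    """Return (score, doc_name) pairs, most similar document first."""
    query = doc_embedding(query_tokens, word_vectors, dim)
    scored = [(cosine(query, doc_embedding(toks, word_vectors, dim)), name)
              for name, toks in docs.items()]
    return sorted(scored, reverse=True)


if __name__ == "__main__":
    # Toy vectors (hypothetical): ਨਾਮ and ਸਿਮਰਨ are placed close together.
    vectors = {
        "ਨਾਮ": [0.9, 0.1, 0.0],
        "ਸਿਮਰਨ": [0.8, 0.2, 0.0],
        "ਸੇਵਾ": [0.1, 0.9, 0.1],
        "ਲੰਗਰ": [0.0, 0.8, 0.3],
    }
    docs = {"doc_1": ["ਨਾਮ"], "doc_2": ["ਸੇਵਾ", "ਲੰਗਰ"]}
    for score, name in rank(["ਸਿਮਰਨ"], docs, vectors, 3):
        print(f"{name}: {score:.3f}")
```

Here `doc_1` ranks first even though it shares no word with the query, which is the behavior that distinguishes contextual search from word-to-word matching.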